Sensing

Each pixel on the sensor converts light energy into an electrical charge proportional to the intensity of the light, much as a solar panel does. A pixel can be pictured as a bucket and photons as grains of sand falling into it; counting the grains collected in each bucket yields the image.

Photodiode

A photodiode is a PN junction semiconductor device in which light generates electron-hole pairs, creating a measurable charge or current. In image sensors, a reverse-biased photodiode acts as a charge-integrating element. During the exposure, photo-generated electrons accumulate in the potential well. The amount of charge that can be stored is set by the junction capacitance of the photodiode, so the pixel behaves like a small capacitor.
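The capacitor analogy can be made concrete with the relation V = Q / C. The following is a minimal sketch, not a model of any particular sensor: the 5 fF capacitance and the 10 000 electron count in the usage lines are illustrative assumptions, and the function names are hypothetical.

```python
ELEMENTARY_CHARGE = 1.602176634e-19  # charge of one electron in coulombs (exact SI value)


def photodiode_voltage(electrons: int, capacitance_farads: float) -> float:
    """Voltage change across the photodiode capacitance for a stored charge (V = Q / C)."""
    charge = electrons * ELEMENTARY_CHARGE
    return charge / capacitance_farads


def conversion_gain_uv_per_electron(capacitance_farads: float) -> float:
    """Voltage step, in microvolts, produced by a single collected electron."""
    return ELEMENTARY_CHARGE / capacitance_farads * 1e6


if __name__ == "__main__":
    c = 5e-15  # assumed junction capacitance of 5 fF (illustrative only)
    print(f"{conversion_gain_uv_per_electron(c):.1f} uV per electron")
    print(f"{photodiode_voltage(10_000, c):.3f} V after 10000 electrons")
```

A smaller capacitance gives a larger voltage step per electron, but the well also fills up at a lower electron count for the same voltage swing.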

CCD sensor

The name is short for Charge-Coupled Device. Photodiodes convert light into charge, and the charge of each pixel is read out at one edge of the sensor. To get there, each pixel passes its charge on to its neighbour, in the same way that people form a chain to pass sandbags during a flood. CCD technology came first, followed by CMOS.
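The sandbag chain can be illustrated with a toy readout loop. This is a simplified sketch that assumes a single readout node at one corner and ignores transfer losses; `ccd_readout` is a hypothetical name, and real CCDs differ in their register layout.

```python
def ccd_readout(pixels):
    """Read a 2D grid of charge packets out through one corner, bucket-brigade style.

    Each outer step shifts the row nearest the readout edge into a serial
    register (all other rows move one step closer). The serial register is
    then shifted out one packet at a time at the output node.
    """
    rows = [list(row) for row in pixels]  # copy so the caller's data is untouched
    output = []
    while rows:
        serial_register = rows.pop()  # vertical shift: edge row enters the register
        while serial_register:
            output.append(serial_register.pop())  # horizontal shift: one packet leaves
    return output


if __name__ == "__main__":
    sensor = [
        [1, 2, 3],
        [4, 5, 6],
    ]
    print(ccd_readout(sensor))
```

The packet closest to the output node is measured first, and the packet in the opposite corner has to be handed along the full chain before it is counted.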

CMOS sensor

Unlike CCDs, CMOS sensors place 3 (3T) or 4 (4T) transistors next to the photodiode of each pixel, so the charge is processed locally. CMOS sensors are more efficient, cheaper to mass-produce, and can capture images faster. Because they can be built as SoCs, they can also implement noise filtering and basic image processing on the chip. CMOS technologies include DPAF (Dual Pixel Autofocus), BSI (back-side illuminated) CMOS and Stacked CMOS.

Rolling shutter (CMOS)

When an electronic shutter is used, as it is when recording video, the sensor is not read out all at once but row by row, from top to bottom. The resulting distortion is called rolling shutter. It can be avoided with a global shutter (GS) camera, but sensors that support this are expensive and have low resolution. Since the distortion is significant for fast-moving or rotating subjects, industrial vision systems almost exclusively use GS cameras.
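The skew caused by row-by-row readout can be reproduced with a toy simulation. The sketch below assumes a one-pixel-wide vertical bar moving to the right at constant speed, with time measured in units of one row readout; `capture_bar` and its parameters are hypothetical names chosen for illustration.

```python
def capture_bar(height, width, bar_x0, speed_px_per_row_time, rolling):
    """Render a moving vertical bar as a list of text rows.

    With rolling=True each row is sampled one row-time later than the row
    above it, so the bar has moved on by the time lower rows are read.
    With rolling=False (global shutter) every row is sampled at t = 0.
    """
    image = []
    for row in range(height):
        t = row if rolling else 0
        x = int(bar_x0 + speed_px_per_row_time * t)
        line = ["."] * width
        if 0 <= x < width:
            line[x] = "#"
        image.append("".join(line))
    return image


if __name__ == "__main__":
    print("rolling shutter:")
    print("\n".join(capture_bar(4, 6, 1, 1, rolling=True)))
    print("global shutter:")
    print("\n".join(capture_bar(4, 6, 1, 1, rolling=False)))
```

In the rolling case the straight bar comes out slanted, which is the characteristic rolling-shutter skew; in the global case it stays vertical.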

Interlaced Scan

At a given resolution and frame rate, a frame can be transmitted so that every line is sent at each step; this is Progressive Scan. In the past, however, due to technological limitations, the Interlaced Scan method was often used, which transmits only the even or only the odd lines at a time. Half of the lines in each displayed frame were thus one step behind the other half. Until around the early 2000s, both methods were used in parallel. News, for example, was often broadcast in 1080i, while action movies and sports were transmitted in 720p to show movement more clearly. Today, Interlaced Scan has almost disappeared from all areas of technology.
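The even/odd line split can be sketched in a few lines. This is an illustrative sketch in which a frame is simply a list of scan lines; `split_fields` and `weave` are hypothetical helper names, not part of any video library.

```python
def split_fields(frame):
    """Split a frame (list of scan lines) into its even-line and odd-line halves."""
    return frame[0::2], frame[1::2]


def weave(even_lines, odd_lines):
    """Interleave an even-line half and an odd-line half back into one frame."""
    frame = []
    for i in range(len(even_lines) + len(odd_lines)):
        source = even_lines if i % 2 == 0 else odd_lines
        frame.append(source[i // 2])
    return frame


if __name__ == "__main__":
    frame_a = ["a0", "a1", "a2", "a3"]
    frame_b = ["b0", "b1", "b2", "b3"]
    even_a, _ = split_fields(frame_a)
    _, odd_b = split_fields(frame_b)
    # Even lines come from moment A, odd lines from the later moment B.
    print(weave(even_a, odd_b))
```

Weaving halves taken at two different moments is what produces the comb-like artifacts on moving objects in interlaced video.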

Definition of noise

Noise is an unwanted modification of a signal that may occur during A/D conversion, signal transmission, or signal processing. It makes the image grainy: neighbouring pixels, which would normally have the same or a similar color, differ significantly. The effect is most noticeable in homogeneous areas.

Sources of noise

When it rains, raindrops fall randomly, just like the photons measured by the image sensor. Because of this, there will be a discrepancy between repeated intensity measurements, which will appear as noise.
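The raindrop picture corresponds to Poisson statistics: if a pixel receives N photons on average, repeated measurements scatter with a standard deviation of about sqrt(N), so the signal-to-noise ratio is also about sqrt(N). The sketch below is a hedged illustration using only the standard library; the sampler follows Knuth's multiplication method and is only suitable for moderate means, and the mean of 100 photons is an arbitrary assumption.

```python
import math
import random
import statistics


def poisson_sample(mean, rng):
    """Draw one Poisson-distributed count (Knuth's method, fine for means below a few hundred)."""
    limit = math.exp(-mean)
    count = 0
    product = rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def shot_noise_snr(mean_photons):
    """Ideal shot-noise-limited SNR: mean / sqrt(mean) = sqrt(mean)."""
    return math.sqrt(mean_photons)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    exposures = [poisson_sample(100, rng) for _ in range(2000)]
    print("mean count:", statistics.mean(exposures))
    print("std dev   :", statistics.pstdev(exposures))
    print("ideal SNR :", shot_noise_snr(100))
```

Collecting four times as many photons only doubles the SNR, which is why larger pixels and longer exposures give cleaner images.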

The noise from photons is independent of the sensor, but the noise introduced while the signal is converted to a voltage or quantized is caused by the sensor. Modern 12-16 bit ADCs are good enough that quantization noise is minimal. Fixed Pattern Noise (FPN) is noise caused by constant pixel-to-pixel differences, but today's sensors are able to solve this problem as well (4T pixel architecture, Correlated Double Sampling).
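Why 12-16 bit converters contribute little noise can be estimated with the textbook model of an ideal ADC: the quantization error is uniformly distributed over one step (LSB), giving an RMS noise of LSB / sqrt(12), and a full-scale sine wave reaches an SNR of roughly 6.02 · bits + 1.76 dB. The sketch below assumes this idealised model and a normalised full-scale range of 1.0; real converters perform somewhat worse.

```python
import math


def quantization_noise_rms(full_scale, bits):
    """RMS quantization noise of an ideal ADC: one step (LSB) divided by sqrt(12)."""
    lsb = full_scale / (2 ** bits)
    return lsb / math.sqrt(12)


def ideal_adc_snr_db(bits):
    """SNR of an ideal ADC for a full-scale sine wave, about 6.02 * bits + 1.76 dB."""
    return 20 * math.log10((2 ** bits) * math.sqrt(1.5))


if __name__ == "__main__":
    for bits in (8, 12, 14, 16):
        noise = quantization_noise_rms(1.0, bits)
        print(f"{bits:2d} bit: rms noise {noise:.2e} of full scale, "
              f"ideal SNR {ideal_adc_snr_db(bits):.1f} dB")
```

Each extra bit halves the step size and adds about 6 dB.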

Noise is also generated when the sensor heats up. The silicon spontaneously generates electrons, and not uniformly across the chip, which produces a noisy image. Astronomical sensors are cooled with liquid nitrogen for this reason. This type of noise is usually only visible at long exposure times.

Dynamic range

The ratio between the highest and lowest measurable light intensity is called dynamic range. The higher this ratio, the better the quality of the image sensor. Limited dynamic range becomes obvious when details in shadowy areas are lost while capturing a bright subject, or when bright areas are burnt out in a low-light scene. The dynamic range of the human eye is not infinite either; if it were, it would never have to "get used" to light.

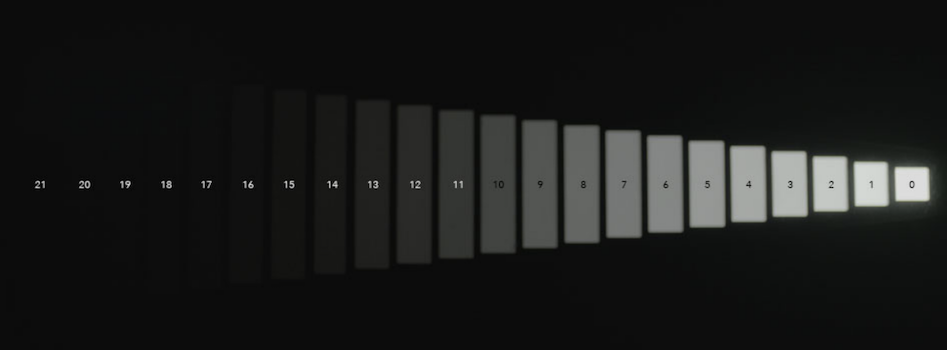

Dynamic range can be measured with specialized instruments using a dedicated light source, such as the XYLA Test Chart. It can be expressed in several ways: as a ratio (following the definition), in decibels, or in exposure values (EV). Industrial cameras tend to use decibels, cinema cameras use EV, and for consumer products (cameras, smart devices) it is usually not stated.

XYLA Test Chart (ALEXA 35):

# ARRI Dynamic Range - https://www.arri.com/en/learn-help/learn-help-camera-system/technical-downloads

$ \dfrac{E_{max}}{E_{min}} $

$ 20 \cdot \log_{10} \left( \dfrac{E_{max}}{E_{min}} \right) $

$ \log_2 \left( \dfrac{E_{max}}{E_{min}} \right) $

| Device | Ratio | dB | Exposure value (EV) |
| --- | --- | --- | --- |
| Human eye | 1000000:1 | 120 | 20 |
| ARRI ALEXA 35 | 130000:1 | 102 | 17 |
| Apple iPhone 15 Pro | 2048:1 | 66 | 11 |
| Panasonic GH1 (2009) | 256:1 | 48 | 8 |
| Panasonic GH6 (2022) | 4096:1 | 72 | 12 |
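The three ways of expressing dynamic range are related by the formulas above, so the dB and EV columns follow from the ratio. The short sketch below recomputes them from the ratios in the table; the function names are illustrative, and the results are rounded to whole numbers as in the table.

```python
import math


def ratio_to_db(ratio):
    """Dynamic range in decibels: 20 * log10(E_max / E_min)."""
    return 20 * math.log10(ratio)


def ratio_to_ev(ratio):
    """Dynamic range in exposure values (stops): log2(E_max / E_min)."""
    return math.log2(ratio)


def ev_to_ratio(ev):
    """Inverse conversion: each additional stop doubles the ratio."""
    return 2 ** ev


if __name__ == "__main__":
    devices = {
        "Human eye": 1_000_000,
        "ARRI ALEXA 35": 130_000,
        "Apple iPhone 15 Pro": 2048,
        "Panasonic GH1 (2009)": 256,
        "Panasonic GH6 (2022)": 4096,
    }
    for name, ratio in devices.items():
        print(f"{name:22s} {ratio:>9d}:1  "
              f"{round(ratio_to_db(ratio)):>3d} dB  {round(ratio_to_ev(ratio)):>2d} EV")
```

One stop (a factor of 2 in the ratio) corresponds to about 6 dB, which is why 12 EV and 72 dB describe the same range.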